Autoencoders

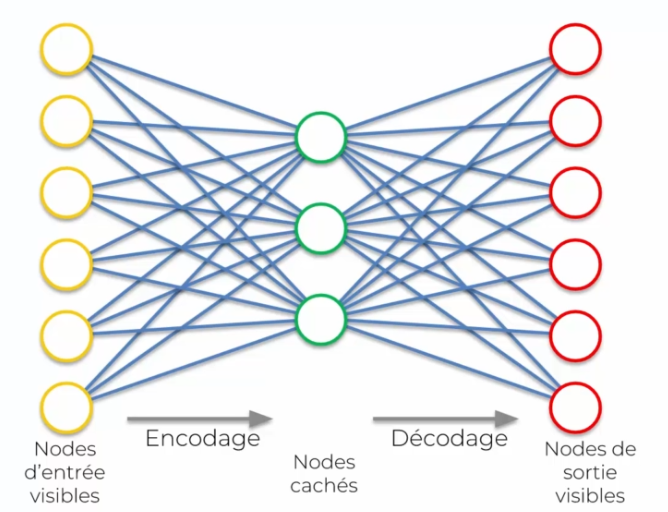

Autoencoders are a type of algorithm that uses neural networks to compress input data into a smaller representation and then decompress it to recover it. The goal is to make the output as close as possible to the original input. This allows specific features or "features" to be detected.

The process takes place in two steps: first, the input is encoded into a hidden layer, then the hidden layer is decoded to obtain the output. Each neuron in the network specializes in finding specific features in the data.

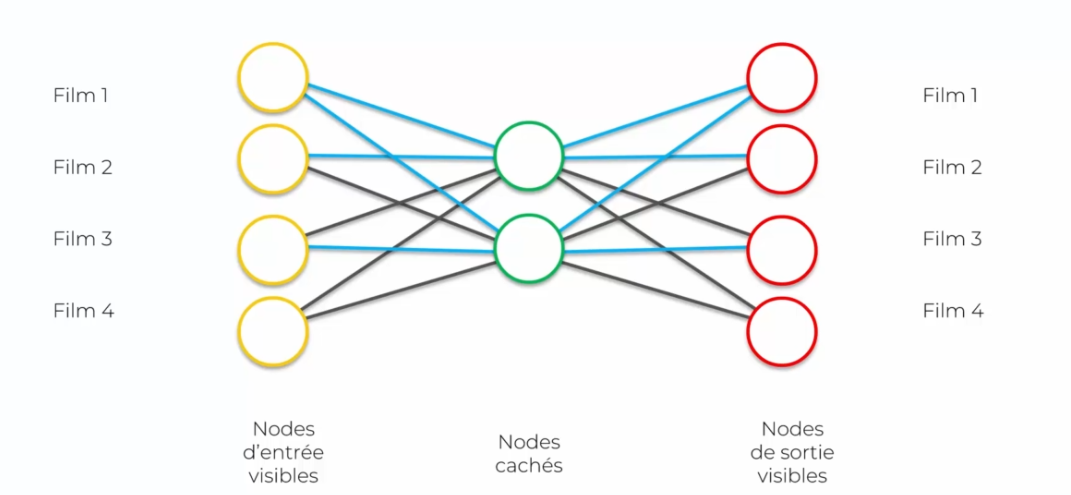

Autoencoders can be used to encode specific data, such as a recommendation system for movies or music. For example, if you enter 6 different types of music, the autoencoder will encode them into a smaller representation. When you want to retrieve this data, the autoencoder will decode it to give you the output.

The goal of this example is to show how the input encoding of movies can be restored intact at the output without loss of information. In this example, a user has rated the movies they have watched.

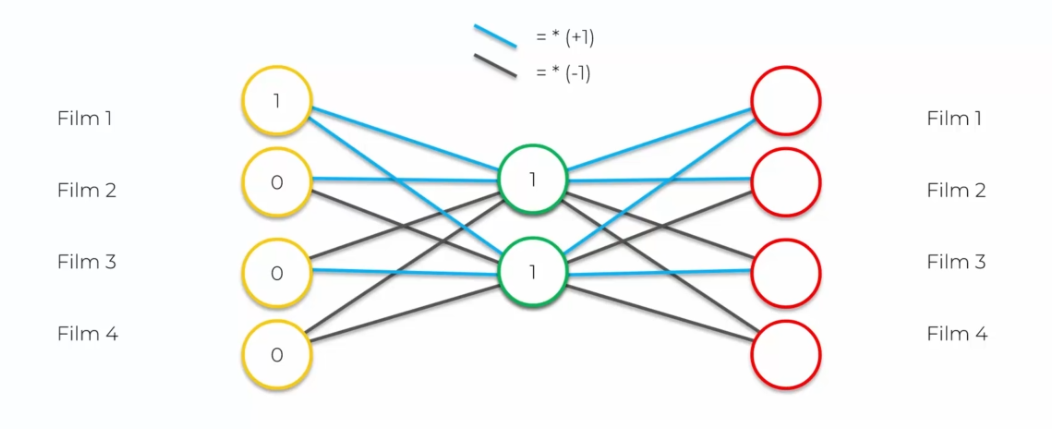

Blue synapses are equal to 1, so we multiply by 1, while black synapses are equal to -1, so we multiply by -1. Using these rules, we can draw links for each input. In our example, the inputs are the rated movies, where 1 represents a liked movie and 0 represents a disliked movie.

By applying the rules for synapses, we can draw links for each rated movie. In our example, we have:

Movie 1 = 1 Movie 2 = 0 Movie 3 = 0 Movie 4 = 0

The encoding of these movies is then passed through the neural network, which will compress the data into a smaller representation and then decompress it to recover it. The goal is to recover the original inputs without loss of information.

The first hidden neuron:

- Movie 1: 1 (rating) * 1 (hidden neuron) = 1

- Movie 2: (0 (rating) * 1 (hidden neuron)) + 1 (previous value) = 1

- Movie 3: (0 (rating) * 1 (hidden neuron)) + 1 (previous value) = 1

- Movie 4: (0 (rating) * 1 (hidden neuron)) + 1 (previous value) = 1

This gives 1, and the same for the outgoing hidden network.

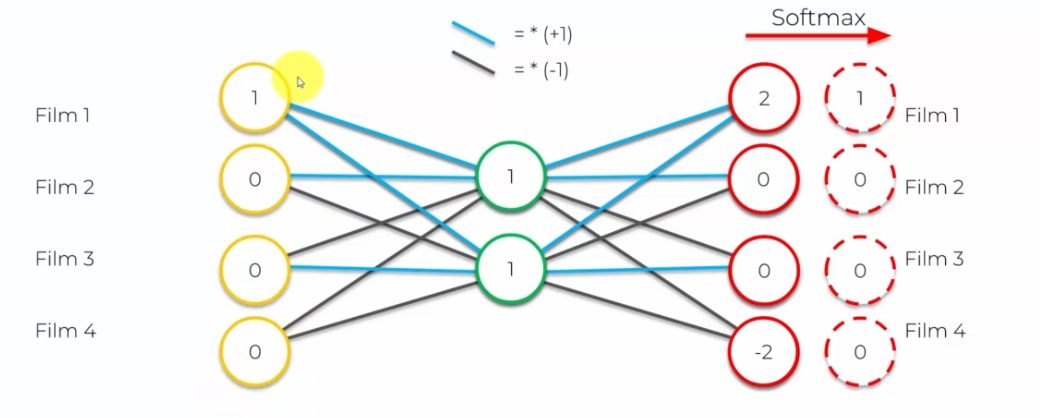

Then we calculate the outputs:

- Movie 1: 2 blue synapses so: 11 + 11 = 2

- Movie 2: 1 blue synapse and 1 black synapse so: 11 + 1-1 = 0

- Movie 3: 1 blue synapse and 1 black synapse so: 11 + 1-1 = 0

- Movie 4: 2 black synapses so: 1*-1 + 1*-1 = -2

Finally, a function called softmax is applied to normalize the output values. This function transforms values that are zero or less than 1 to 0, and values that are equal to or greater than 1 to 1.

Training

Training an autoencoder for movie recommendation can be described in several steps:

-

Step 1: The process starts with a table that contains all the ratings given by users to different movies. Each entry contains a rating (from 1 to 5) for a given movie by a specific user. If a user hasn't rated a movie, the value for that entry is 0.

-

Step 2: The first user enters the network. The input vector x contains all the ratings for all the movies rated by this user.

-

Step 3: The input vector x is encoded into a lower-dimensional vector z using a mapping function f, such as the sigmoid function. This compresses the data.

-

Step 4: The vector z is then decoded into a vector Y of the same dimension as X. The objective is to replicate the vector X in the output, using the decoding process.

-

Step 5: The reconstruction error d(x,y) = ||x-y|| is calculated, which measures the difference between the input and output.

-

Step 6: Backpropagation of the error is done from right to left to update the weights in the network. The learning rate determines the magnitude of the weight updates.

-

Step 7: Steps 1-6 are repeated for each user. The weights are updated after each observation (online learning) or after each group of observations (batch learning).

-

Step 8: Once the entire training set has been passed through the network, this creates one epoch. It is recommended to repeat the process for multiple epochs to improve the recommendation accuracy.

Autoencoders for movie recommendation use an encoding and decoding process to compress the data and replicate the input vectors in the output. Learning is done through backpropagation of the error and weights are updated for each user. Results are improved by performing multiple training epochs.

Too Large Hidden Layer

If the number of neurons in the hidden layer is increased compared to the input layer, this would allow the model to capture more complex and abstract features of the input data. This could help improve the recommendation accuracy, as the model would have a higher representational capacity and be able to capture more subtle nuances of user preferences.

However, adding more neurons can also increase the risk of overfitting, where the model fits too well to the training data and does not generalize well to test data. To avoid this, it is important to use regularization techniques such as pruning some synapses or adding noise, as well as regularly validating the model's performance on test data.

To address this, autoencoders are used. Here are some types of autoencoders:

-

Sparse autoencoders: These autoencoders use a technique called Dropout to prevent overfitting. This technique involves randomly deactivating some neurons during training to prevent the model from getting too accustomed to the input data.

-

Denoising autoencoders: These autoencoders slightly modify the input data to prevent overfitting. This is done by adding noise to the input, such as blur, so that the model cannot simply copy the input data to the output.

-

Contractive autoencoders: These autoencoders add a penalty to the cost function that prevents the network from simply copying the input data to the output. If the network does copy, it will be more severely penalized.

-

Stacked autoencoders: These autoencoders add a new hidden layer instead of new neurons in the same layer. This creates a double encoding and allows for a double filter to detect features.

-

Deep autoencoders: These autoencoders stack restricted Boltzmann machines, which are trained layer by layer and then adjusted with backpropagation. This allows for a more complex and deeper structure to extract richer information from the input data.

In summary, autoencoders are a family of neural networks used to prevent overfitting and extract relevant features from input data. They can use various techniques, such as Dropout, denoising, cost function penalty, stacked hidden layers, and deep network construction, to improve the quality of data representation and increase prediction accuracy.

English

English